Clustering: Same ZFS Pool on All Nodes

Example Environment

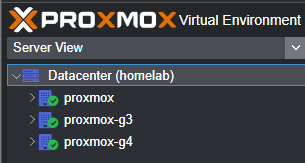

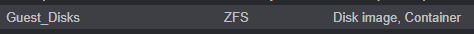

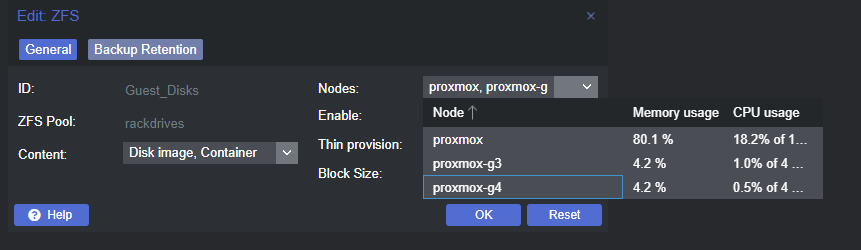

I keep my VM and container guest disks on a ZFS share attached to the ZFS pool rackdrives. Now that I have created a cluster, I want to be able to live migrate VMs and replicate containers between all three nodes.

When I created the cluster by joining two more nodes, those nodes were blank environments with no ZFS pool or shares configured.

Creating the ZFS Pool

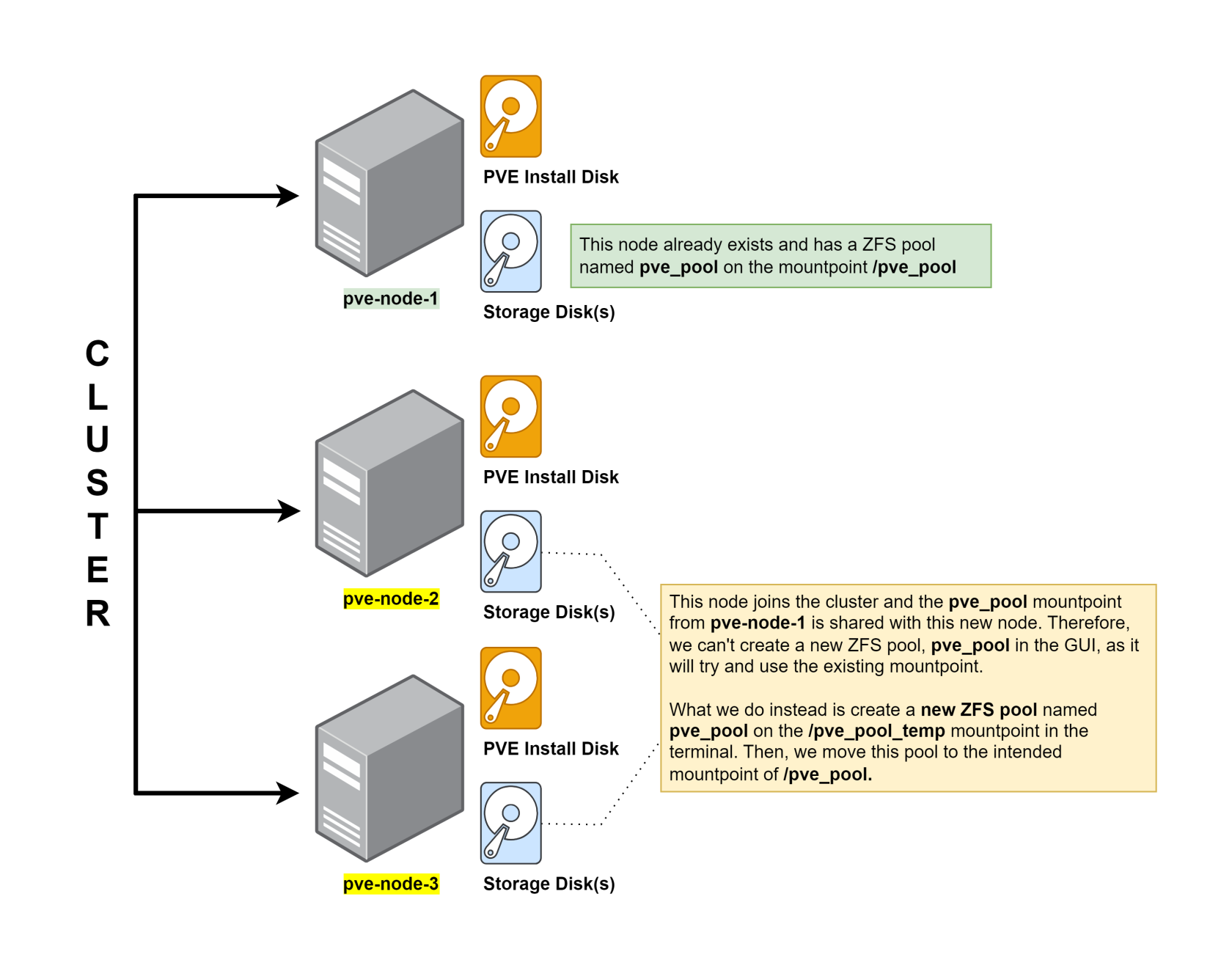

The Problem

The two new nodes, proxmox-g3 and proxmox-g4, do not have a ZFS pool called rackdrives. When the existing node, proxmox, shares its storage with them, the Guest_Disks storage target fails to resolve on those nodes because the pool it points to does not exist there.
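One way to confirm this from a shell on each node is to ask ZFS whether the pool is imported. This is only a minimal sketch: `pool_exists` is a hypothetical helper name, and it relies on `zpool list` exiting non-zero when the named pool is not present.

```shell
# Hypothetical helper: succeeds only if the named ZFS pool is imported on this node.
pool_exists() {
    # -H: no headers, -o name: print only the pool name; output is discarded,
    # only the exit status matters here
    zpool list -H -o name "$1" >/dev/null 2>&1
}

if pool_exists rackdrives; then
    echo "rackdrives: present"
else
    echo "rackdrives: missing"
fi
```

Run on proxmox-g3 or proxmox-g4 before the pool is created, this should report the pool as missing.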

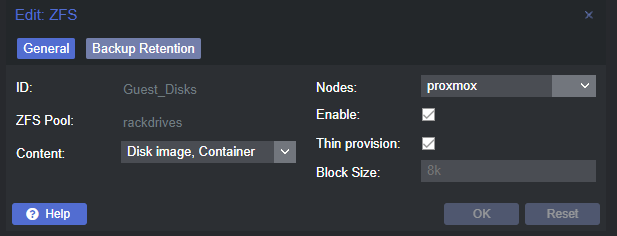

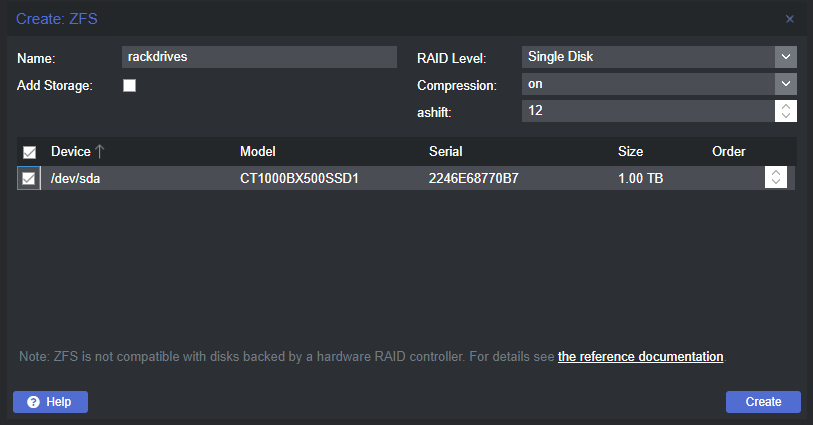

If I go through the GUI to add a zpool to proxmox-g3, for example, I could fill out the form like this:

The only problem is that the pool rackdrives already exists on the node proxmox, so creating a pool with the same name from the GUI throws an error:

mountpoint '/rackdrives' exists and is not empty

use '-m' option to provide a different default

TASK ERROR: command '/sbin/zpool create -o 'ashift=12' rackdrives /dev/disk/by-id/ata-CT1000BX500SSD1_2246E68770B7' failed: exit code 1

That's because the /rackdrives path is already referenced cluster-wide by the storage definition in /etc/pve/storage.cfg, and everything in /etc/pve is replicated across the cluster.
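To see the condition that `zpool create` is complaining about, you can check from the new node's shell whether the default mountpoint already exists and has contents, and where the replicated storage config mentions the pool. A rough sketch, with `mountpoint_in_use` as a hypothetical helper name:

```shell
# Hypothetical helper: true if the path is a directory containing at least one
# entry, which matches the "exists and is not empty" condition in the error.
mountpoint_in_use() {
    [ -d "$1" ] && [ -n "$(ls -A "$1" 2>/dev/null)" ]
}

if mountpoint_in_use /rackdrives; then
    echo "/rackdrives exists and is not empty:"
    ls -A /rackdrives
fi

# Show where the cluster-wide storage config mentions the pool
# (prints nothing if the file or the name is absent).
grep -n rackdrives /etc/pve/storage.cfg 2>/dev/null || true
```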

The Solution

Use a different mountpoint when creating the ZFS pool by creating it in the shell.

# Create the ZFS pool with a dummy mountpoint

zpool create -m /rackdrives-g3 -o ashift=12 rackdrives /dev/disk/by-id/ata-CT1000BX500SSD1_2246E68770B7

# The /rackdrives path was already pushed to the new node at clustering,

# so switch the pool over to that mountpoint

zfs set mountpoint=/rackdrives rackdrives

# Enable compression

zfs set compression=lz4 rackdrives

# Remove the dummy mountpoint

rm -rf /rackdrives-g3

Finally, go to the Datacenter view and click Storage. Now you can safely indicate that the Guest_Disks target can be shared on the rackdrives pool on all nodes. This allows live migration to complete without any issues.
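Before changing the storage settings, it may be worth confirming on each new node that the pool ended up with the intended mountpoint and compression. The following is a small sketch under that assumption; `check_prop` is a hypothetical helper that wraps `zfs get`.

```shell
# Hypothetical helper: compare one ZFS property of a dataset with an expected value.
check_prop() {
    dataset=$1
    prop=$2
    expected=$3
    # -H: script-friendly output, -o value: print only the property value
    actual=$(zfs get -H -o value "$prop" "$dataset" 2>/dev/null)
    if [ "$actual" = "$expected" ]; then
        echo "ok: $prop=$actual"
    else
        echo "MISMATCH: $prop is '$actual', expected '$expected'"
        return 1
    fi
}

# Values taken from the commands above
check_prop rackdrives mountpoint /rackdrives || true
check_prop rackdrives compression lz4 || true
```

A mismatch on either line suggests one of the `zfs set` commands did not take effect on that node.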